How to make GitHub Actions 22x faster with bare-metal Arm

GitHub doesn't provide hosted Arm runners, so how can you use native Arm runners safely & securely?

GitHub Actions is a modern, fast and efficient way to build and test software, with free runners available. We use the free runners for various open source projects and are generally very pleased with them. After all, who can argue with good enough and free? But one of the main caveats is that GitHub's hosted runners don't yet support the Arm architecture.

So many people turn to software-based emulation using QEMU. QEMU is tricky to set up, and requires specific code and tricks if you want to use software in a standard way, without modifying it. But QEMU is great when it runs with hardware acceleration. Unfortunately, the hosted runners on GitHub do not have KVM available, so builds tend to be incredibly slow, and I mean so slow that it's going to distract you and your team from your work.
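For context, an emulated Arm test job on a hosted runner often looks something like the sketch below. It is an illustration only, not Parca's actual workflow: the workflow and job names, the golang:1.19 image tag and the mount path are assumptions, and docker/setup-qemu-action is just one common way to register QEMU on the runner.

```yaml
name: emulated-arm64-test

on: push

jobs:
  test-arm64-qemu:
    # Hosted x86_64 runner: every arm64 instruction is emulated in software
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      # Registers QEMU binfmt handlers so that arm64 containers can start
      - uses: docker/setup-qemu-action@v2
        with:
          platforms: arm64
      - name: Run the go tests under emulation
        run: |
          # Image tag and mount path are assumptions for illustration
          docker run --rm --platform linux/arm64 \
            -v "$PWD":/src -w /src \
            golang:1.19 go test ./...
```

Without KVM, the compiler and the test binaries all run through software emulation, which is where the long build times come from.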

This was even more evident when Frederic Branczyk tweeted about his experience with QEMU on GitHub Actions for his open source observability project named Parca.

Does anyone have a @github actions self-hosted runner manifest for me to throw at a @kubernetesio cluster? I'm tired of waiting for emulated arm64 CI runs taking ages.

— Frederic 🧊 Branczyk @brancz@hachyderm.io (@fredbrancz) October 19, 2022

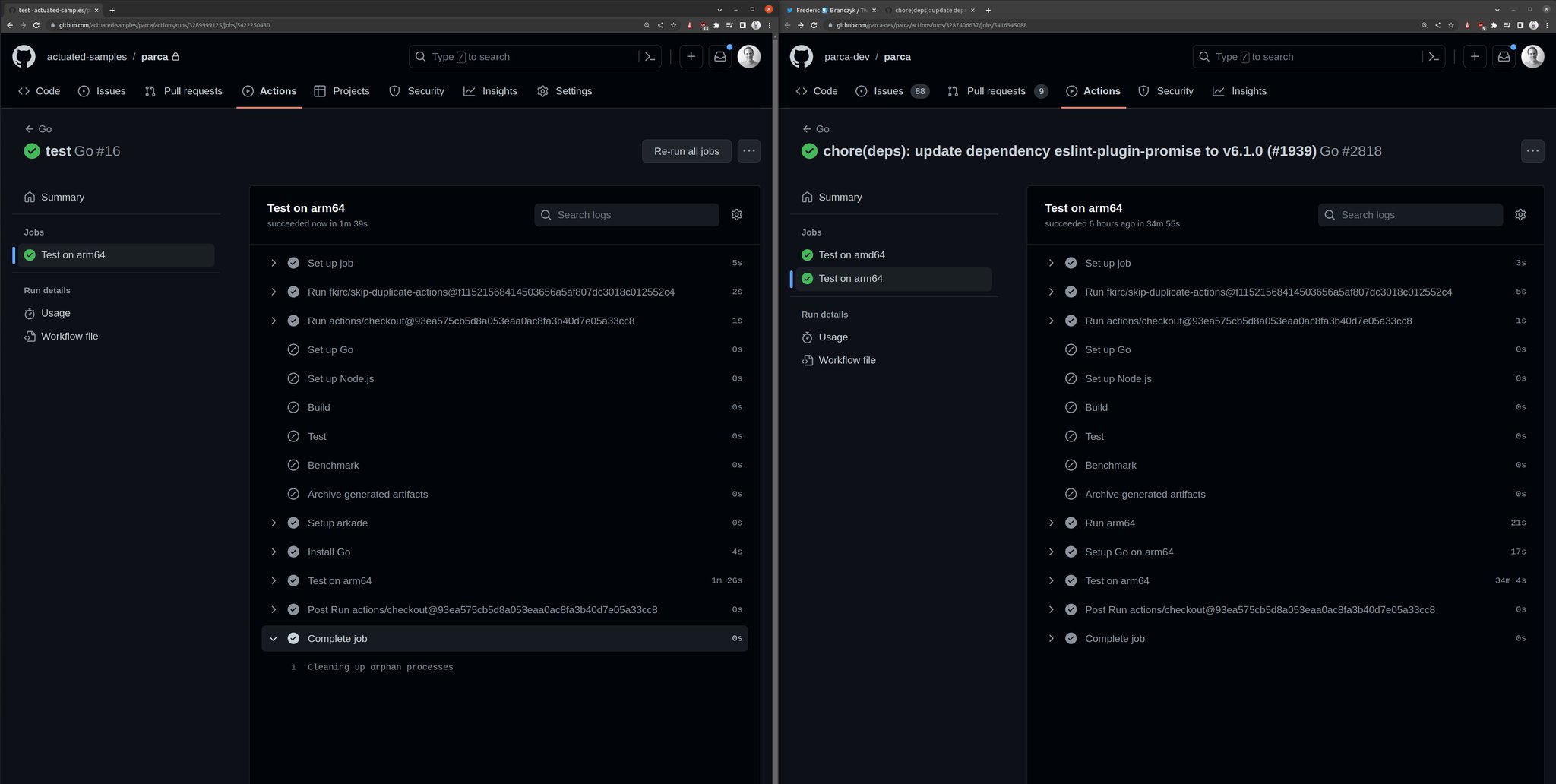

I checked out his build and expected "ages" to mean 3 minutes. In fact, it meant 33.5 minutes. I know because I forked his project and ran a test build.

After migrating it to actuated and one of our build agents, the time dropped to 1 minute and 26 seconds, a 22x improvement for zero effort.

This morning @fredbrancz said that his ARM64 build was taking 33 minutes using QEMU in a GitHub Action and a hosted runner.

I ran it on @selfactuated using an ARM64 machine and a microVM.

That took the time down to 1m 26s!! About a 22x speed increase. https://t.co/zwF3j08vEV pic.twitter.com/ps21An7B9B

— Alex Ellis (@alexellisuk) October 20, 2022

You can see the results here:

As a general rule, download speeds will be roughly the same as on a hosted runner, which may even be slightly faster due to the connection speed of Azure's network.

But the compilation times speak for themselves. In the Parca build, go test was being run with QEMU, and moving it to run directly on the ARM64 host resulted in the marked increase in speed. In fact, the team had introduced lots of complicated code to try and set up a Docker container to use QEMU. All of that could be stripped out and replaced with a very standard looking test step:

```yaml
- name: Run the go tests
  run: go test ./...
```
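Put into a complete job, the native version could look like the following sketch. The workflow name, job name and Go version are assumptions rather than Parca's real configuration; actuated-aarch64 is the runner label used later in this post.

```yaml
name: arm64-tests

on: push

jobs:
  test-arm64:
    # Runs natively on an Arm host, so no QEMU or container wrapper is needed
    runs-on: actuated-aarch64
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-go@v3
        with:
          go-version: "1.19" # assumed version, match your go.mod
      - name: Run the go tests
        run: go test ./...
```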

Can't I just install the self-hosted runner on an Arm VM?

There are relatively cheap Arm VMs available from Oracle OCI, Google and Azure based upon the Ampere Altra CPU. AWS have their own Arm VMs available in the Graviton line.

So why shouldn't you just go ahead and install the runner and add them to your repos?

The moment you do that you run into three issues:

- You now have to maintain the software packages installed on that machine

- If you use KinD or Docker, you're going to run into conflicts between builds

- Out of the box scheduling is poor - by default it only runs one build at a time there

Chasing your tail with package updates and faulty builds due to caching and conflicts is not fun. You may feel like you're saving money, but you are paying with your time, and if you have a team, you're paying with their time too.

Most importantly, GitHub say that a self-hosted runner cannot be used safely with a public repository. There's no security isolation, and state can be left over from one build to the next, including harmful code left intentionally by bad actors, or accidentally from malware.

So how do we get to a safer, more efficient Arm runner?

The answer is to get us as close as possible to a hosted runner, but with the benefits of a self-hosted runner.

That's where actuated comes in.

We run a SaaS that manages bare-metal for you, and talks to GitHub on your behalf to schedule jobs efficiently.

- No need to maintain software, we do that for you with an automated OS image

- We use microVMs to isolate builds from each other

- Every build is immutable and uses a clean environment

- We can schedule multiple builds at once without side-effects

microVMs on Arm require a bare-metal server, and we have tested all the options available to us. Note that the Arm VMs discussed above do not currently support KVM or nested virtualisation.

- a1.metal on AWS - 16 cores / 32GB RAM - 300 USD / mo

- c3.large.arm64 from Equinix Metal with 80 Cores and 256GB RAM - 2.5 USD / hr

- RX-Line from Hetzner with 128GB / 256GB RAM, NVMe & 80 cores for approx 200-250 EUR / mo.

- Mac Mini M1 - 8 cores / 16GB RAM - tested with Asahi Linux - one-time payment of ~ 1500 USD

If you're already an AWS customer, the a1.metal is a good place to start. If you need expert support, networking and a high speed uplink, you can't beat Equinix Metal (we have access to hardware there and can help you get started) - you can even pay per minute and provision machines via API. The Mac Mini M1 has a really fast NVMe and we're running one of these with Asahi Linux for our own Kernel builds for actuated. The RX Line from Hetzner has serious power and is really quite affordable, but just be aware that you're limited to a 1Gbps connection, a setup fee and a monthly commitment, unless you pay significantly more.

I even tried Frederic's Parca job on my 8GB Raspberry Pi with a USB NVMe. Why even bother, do I hear you say? Well for a one-time payment of 80 USD, it was 26m30s quicker than a hosted runner with QEMU!

Learn how to connect an NVMe over USB-C to your Raspberry Pi 4

What does an Arm job look like?

Since I first started trying to build code for Arm in 2015, I noticed a group of people who had a passion for this efficient CPU and platform. They would show up on GitHub issue trackers, ready to send patches, get access to hardware and test out new features on Arm chips. It was a tough time, and we should all be grateful for their efforts which go largely unrecognised.

If you're looking to make your software compatible with Arm, feel free to reach out to me via Twitter.

In 2020 when Apple released their M1 chip, Arm went mainstream, and projects that had been putting off Arm support like KinD and Minikube, finally had that extra push to get it done.

I've had several calls with teams who use Docker on their M1/M2 Macs exclusively, meaning they build only Arm binaries and use only Arm images from the Docker Hub. Some of them even ship to production using Arm images, but I think we're still a little behind the curve there.

That means Kubernetes - KinD/Minikube/K3s and Docker - including Buildkit, compose etc, all work out of the box.

I'm going to use the arkade CLI to download KinD and kubectl, however you can absolutely find the download links and do all this manually. I don't recommend it!

```yaml
name: e2e-kind-test

on: push

jobs:
  start-kind:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@master
        with:
          fetch-depth: 1
      - name: get arkade
        uses: alexellis/setup-arkade@v1
      - name: get kind and kubectl
        uses: alexellis/arkade-get@master
        with:
          kubectl: latest
          kind: latest
      - name: Create a KinD cluster
        run: |
          mkdir -p $HOME/.kube/
          kind create cluster --wait 300s
      - name: Wait until CoreDNS is ready
        run: |
          kubectl rollout status deploy/coredns -n kube-system --timeout=300s
      - name: Explore nodes
        run: kubectl get nodes -o wide
      - name: Explore pods
        run: kubectl get pod -A -o wide
```

That's our x86_64 (Intel/AMD) build. It will run on a hosted runner, but will be kind of slow.

Let's convert it to run on an actuated ARM64 runner:

```diff
jobs:
  start-kind:
-    runs-on: ubuntu-latest
+    runs-on: actuated-aarch64
```

That's it, we've changed the runner type and we're ready to go.

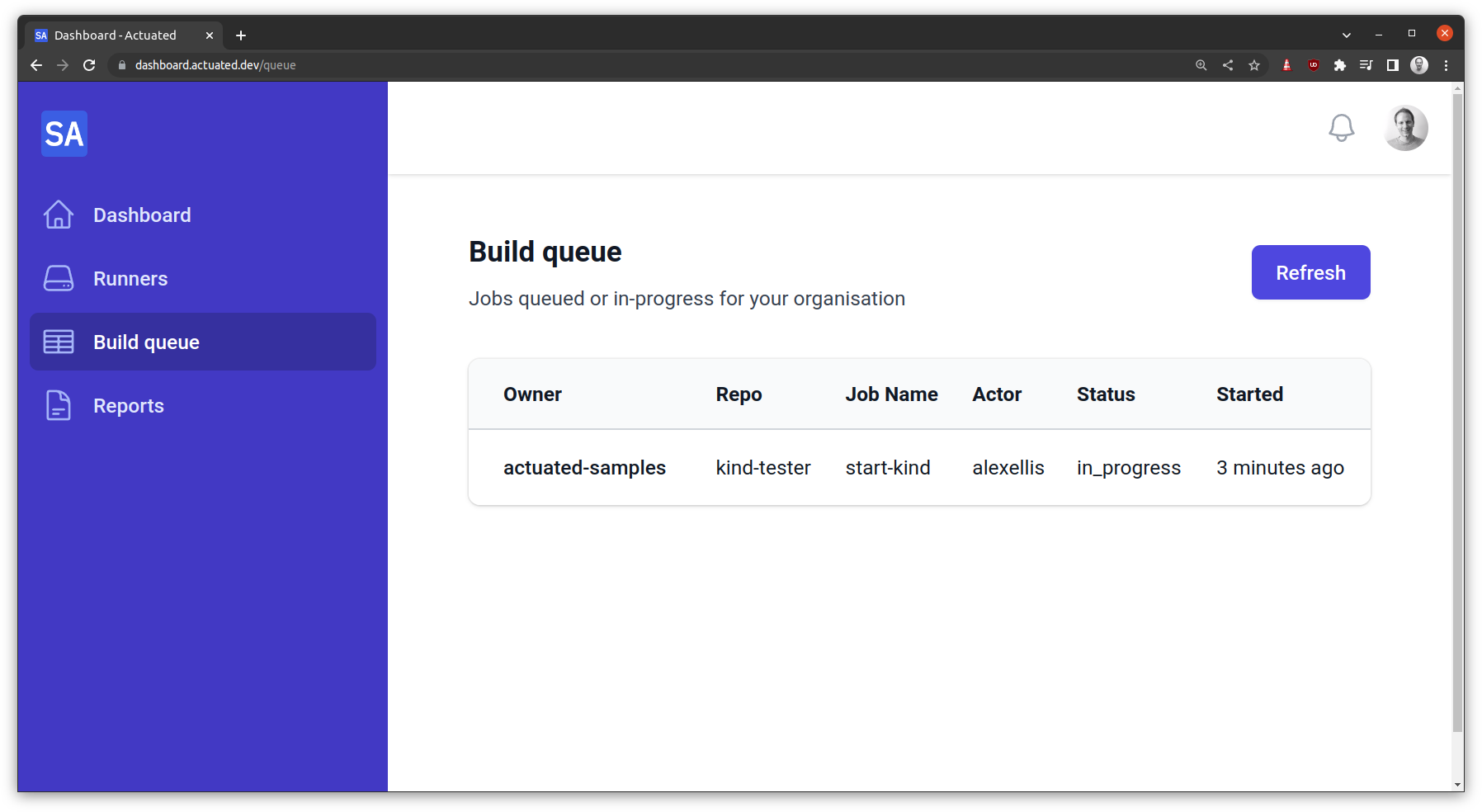

An in progress build on the dashboard

Behind the scenes, the actuated SaaS schedules the build on a bare-metal ARM64 server, the microVM boots in less than 1 second, and then the standard GitHub Actions Runner talks securely to GitHub to run the build. The build is isolated from other builds, and the runner is destroyed after the build is complete.

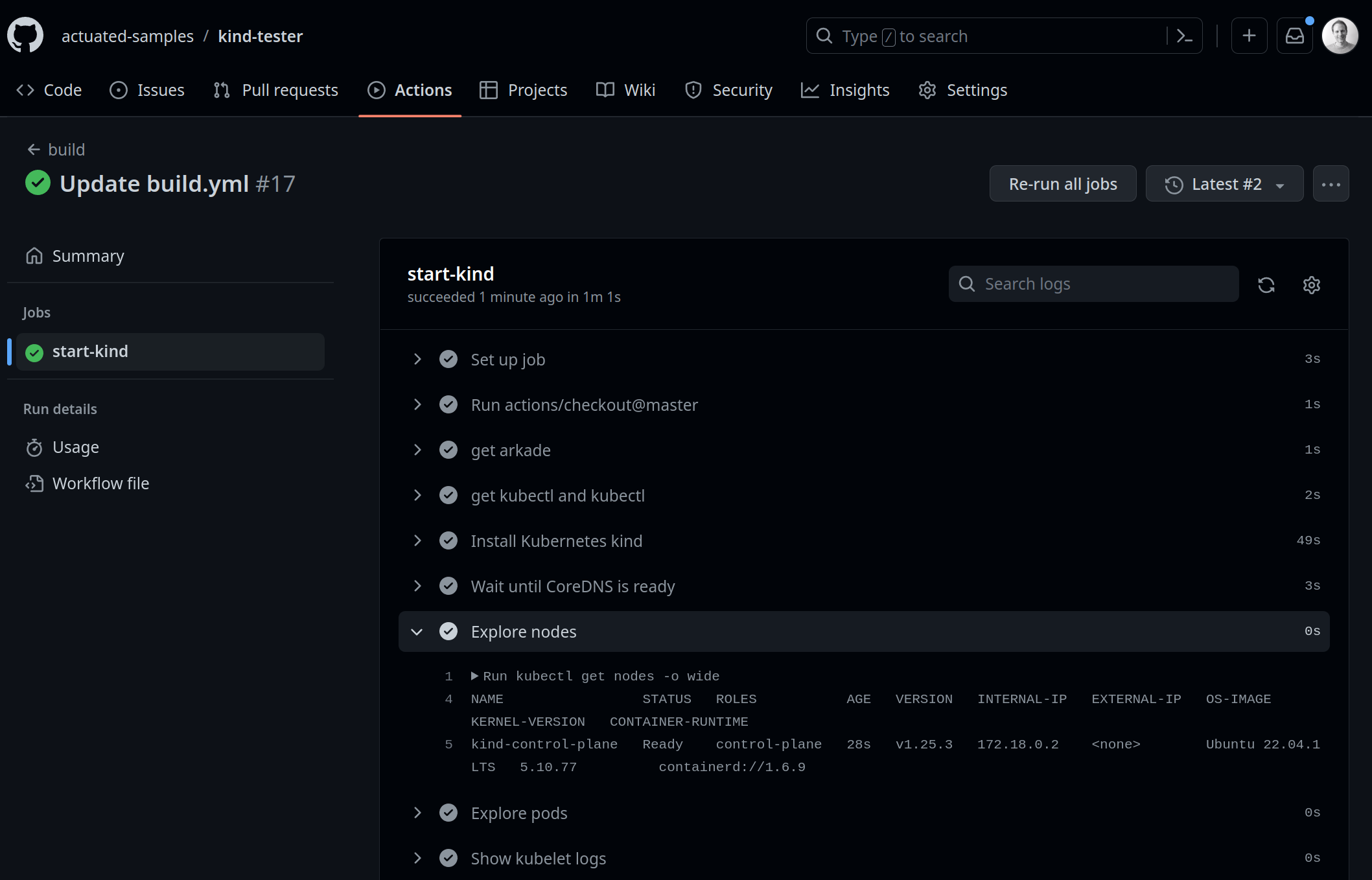

Setting up an Arm KinD cluster took about 49s

Setting up an Arm KinD cluster took about 49s, then it's over to you to test your Arm images, or binaries.

If I were setting up CI and needed to test software on both Arm and x86_64, then I'd probably create two separate builds, one for each architecture, with a runs-on label of actuated and actuated-aarch64 respectively.
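As a rough sketch of that layout, the two builds could be expressed as two jobs in one workflow (two separate workflow files would work just as well). The workflow name, job names and the uname step are placeholders for your own test steps; only the two runs-on labels come from this post.

```yaml
name: e2e-multi-arch

on: push

jobs:
  e2e-x86_64:
    # actuated runner on an Intel/AMD host
    runs-on: actuated
    steps:
      - uses: actions/checkout@v3
      - name: Show the architecture
        run: uname -m

  e2e-arm64:
    # actuated runner on a bare-metal Arm host
    runs-on: actuated-aarch64
    steps:
      - uses: actions/checkout@v3
      - name: Show the architecture
        run: uname -m
```

The two jobs have no dependency on each other, so GitHub Actions can run them in parallel.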

Do you need to test multiple versions of Kubernetes? Let's face it, it changes so often that who doesn't need to do that? You can use the matrix feature to test multiple versions of Kubernetes on Arm and x86_64.
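A minimal sketch of such a matrix is shown below, assuming the same arkade-based setup as the earlier workflow. The Kubernetes versions are example kindest/node image tags only, so check which tags are published for the KinD release you use, and the workflow name is a placeholder.

```yaml
name: e2e-kind-matrix

on: push

jobs:
  start-kind:
    strategy:
      # Let the remaining combinations finish if one of them fails
      fail-fast: false
      matrix:
        runner: [actuated, actuated-aarch64]
        # Example node image tags only, adjust to your KinD release
        k8s: [v1.23.13, v1.24.7, v1.25.3]
    runs-on: ${{ matrix.runner }}
    steps:
      - uses: actions/checkout@v3
      - uses: alexellis/setup-arkade@v1
      - uses: alexellis/arkade-get@master
        with:
          kubectl: latest
          kind: latest
      - name: Create a KinD cluster
        run: |
          mkdir -p $HOME/.kube/
          kind create cluster --wait 300s --image kindest/node:${{ matrix.k8s }}
      - name: Explore nodes
        run: kubectl get nodes -o wide
```

With two runner types and three versions, this expands to six jobs, each of which gets its own clean environment.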

I show 5x clusters being launched in parallel in the video below:

Demo - Actuated - secure, isolated CI for containers and Kubernetes

What about Docker?

Docker comes pre-installed in the actuated OS images, so you can simply use docker build, without needing to install extra tools like Buildx or to worry about multi-arch Dockerfiles. These are always good to have though, and are available out of the box in OpenFaaS, if you're curious what a multi-arch Dockerfile looks like.
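As a hedged example, a native image build could be as short as the job below. The workflow name, job name and the example/my-app image name are placeholders, and the sketch assumes a Dockerfile at the root of the repository.

```yaml
name: docker-build

on: push

jobs:
  build-image:
    runs-on: actuated-aarch64
    steps:
      - uses: actions/checkout@v3
      - name: Build an arm64 image natively
        run: |
          # The image name is a placeholder
          docker build -t example/my-app:${{ github.sha }} .
      - name: Confirm the image architecture
        run: |
          docker image inspect --format '{{.Architecture}}' example/my-app:${{ github.sha }}
```

Because the build runs on an Arm host, the resulting image targets arm64 without any --platform flag or emulation.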

Wrapping up

Building on bare-metal Arm hosts is more secure because side effects cannot be left over between builds, even if malware is installed by a bad actor. It's more efficient because you can run multiple builds at once, and you can use the latest software with our automated Operating System image. Enabling actuated on a build is as simple as changing the runner type.

And as you've seen from the example with the OSS Parca project, moving to a native Arm server can improve speed by 22x, shaving off a massive 32 minutes per build (from 33.5 minutes down to 1 minute and 26 seconds).

Who wouldn't want that?

Parca isn't a one-off. I was also told by Connor Hicks from Suborbital that they have an Arm build that takes a good 45 minutes due to using QEMU.

Just a couple of days ago, Ed Warnicke, Distinguished Engineer at Cisco, reached out to us to pilot actuated. Why?

Ed, who had Network Service Mesh in mind, said:

I'd kill for proper Arm support. I'd love to be able to build our many containers for Arm natively, and run our KIND based testing on Arm natively. We want to build for Arm - Arm builds is what brought us to actuated

So if that sounds like where you are, reach out to us and we'll get you set up.

Additional links: