Bring Your Own Metal Case Study with GitHub Actions

See how BYO bare-metal made a 6 hour GitHub Actions build complete 25x faster.

I'm going to show you how both a regular x86_64 build and an Arm build were made dramatically faster by using Bring Your Own (BYO) bare-metal servers.

At the early stage of a project, GitHub's standard runners with 2x cores, 8GB RAM, and a little free disk space are perfect because they're free for public repos. For private repos they come in at a modest cost, if you keep your usage low.

What's not to love?

Well, Ed Warnicke, Distinguished Engineer at Cisco, contacted me a few weeks ago and told me about the VPP project, and some of the problems he was running into trying to build it with hosted runners.

The Fast Data Project (FD.io) is an open-source project aimed at providing the world's fastest and most secure networking data plane through Vector Packet Processing (VPP).

Whilst VPP can be used as a stand-alone project, it is also a key component in the Cloud Native Computing Foundation's (CNCF's) Open Source Network Service Mesh project.

There were two issues:

-

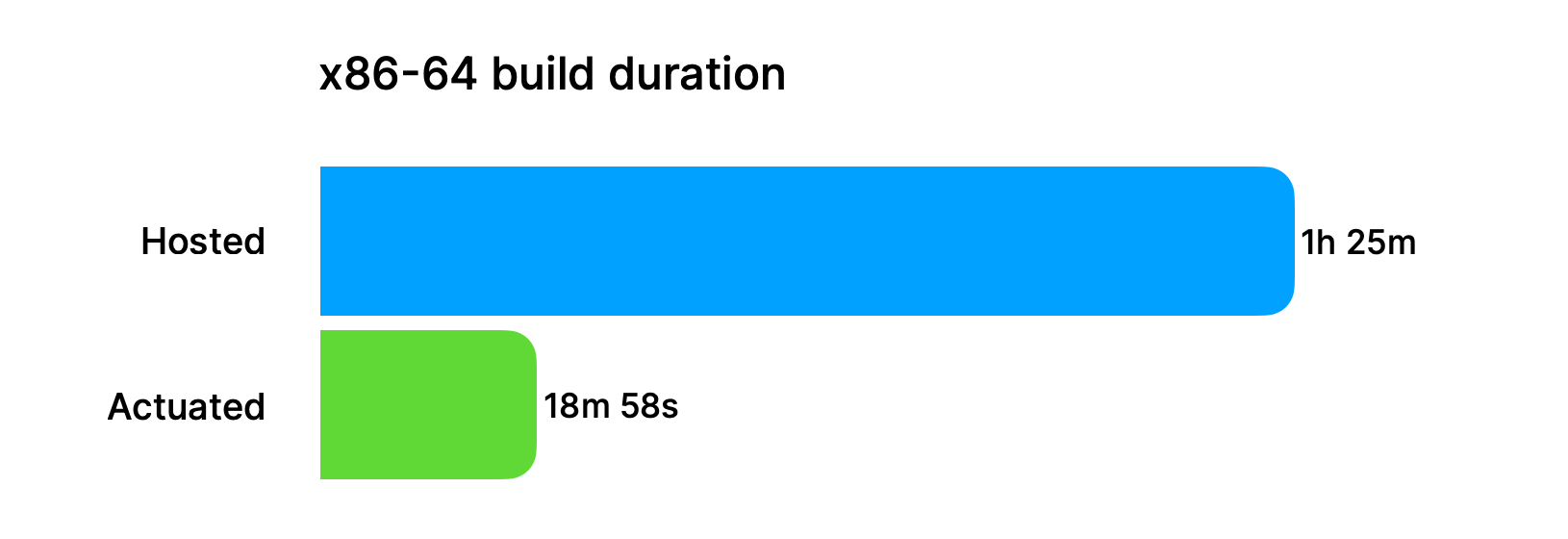

The x86_64 build was taking 1 hour 25 minutes on a standard runner.

Why is that a problem? CI is meant both to validate against regressions and to build binaries for releases. If that process can take 50 minutes before failing, it's incredibly frustrating. For an open source project, it's actively hostile to contributors.

-

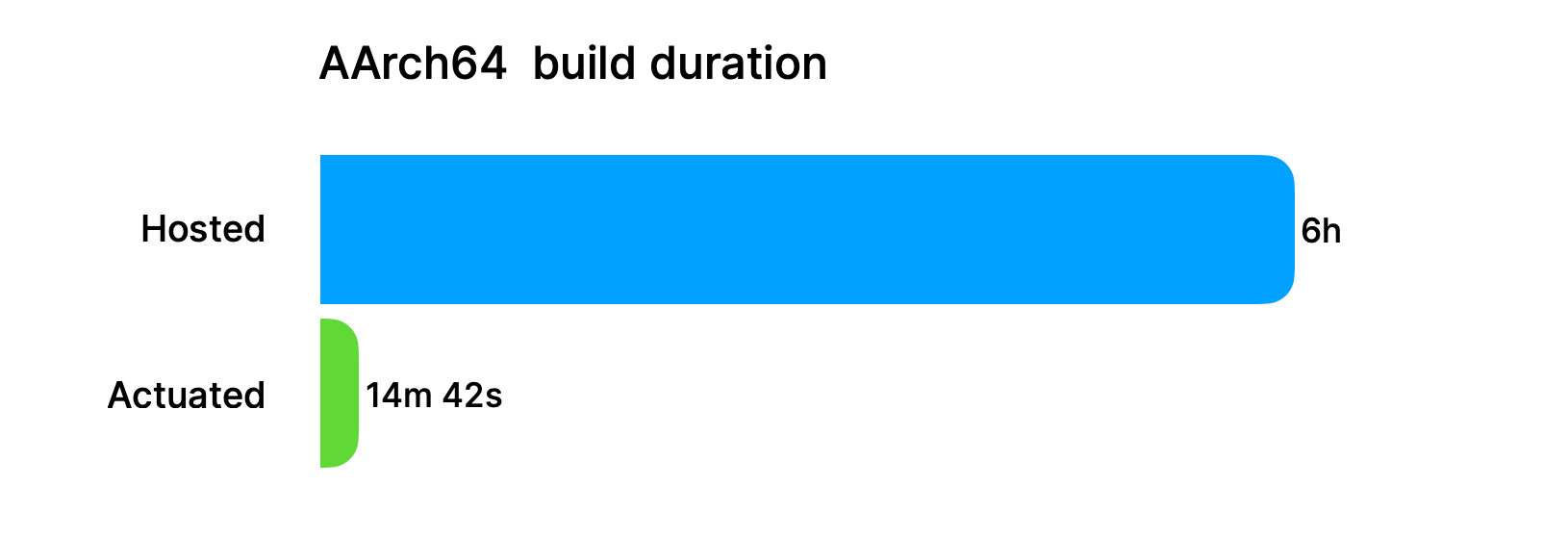

The Arm build was hitting the 6 hour limit for GitHub Actions then failing

Why? Well it was using QEMU, and I've spoken about this in the past - QEMU is a brilliant, zero cost way to build Arm binaries on a regular machine, but it's slow. And you'll see just how slow in the examples below, including where my Raspberry Pi beat a GitHub runner.

We explain how to use QEMU in Docker Actions in the following blog post:

The efficient way to publish multi-arch containers from GitHub Actions

Rubbing some bare-metal on it

So GitHub does actually have a beta going for "larger runners", and if Ed wanted to try that out, he'd have to apply to a beta waitlist, upgrade to a Team or Enterprise Plan, and then pick a new runner size.

But that wouldn't have covered him for the Arm build, because GitHub doesn't have any support there right now. I'm sure it will come one day, but for now the project is unable to release binaries for its Arm users.

With actuated, we have no interest in competing with GitHub's business model of selling compute on demand. We want to do something more unique than that - we want to enable you to bring your own (BYO) devices and then use them as runners, with VM-level isolation and one-shot runners.

What does Bring Your Own (BYO) mean?

"Your Own" does not have to mean physical ownership. You do not need to own a datacenter, or to send off a dozen Mac Minis to a Colo. You can provision bare-metal servers on AWS or with Equinix Metal as quickly as you can get an EC2 instance. Actually, bare-metal isn't strictly needed at all, and even DigitalOcean's and Azure's VMs will work with actuated because they support KVM, which we use to launch Firecracker.

And who is behind actuated? We are a nimble team, but have a pedigree with Cloud Native and self-hosted software going back 6-7 years from OpenFaaS. OpenFaaS is a well known serverless platform which is used widely in production by commercial companies including Fortune 500s.

Actuated uses a Bring Your Own (BYO) server model, but there's very little for you to do once you've installed the actuated agent.

Here's how to set up the agent software: Actuated Docs: Install the Agent.

You then get detailed stats about each runner, the build queue and insights across your whole GitHub organisation, in one place:

Actuated now aggregates usage data at the organisation level, so you can get insights and spot changes in behaviour.

This peak of 57 jobs was when I was quashing CVEs for @openfaas Pro customers in Alpine Linux and a bunch of Go https://t.co/a84wLNYYjo… https://t.co/URaxgMoQGW pic.twitter.com/IuPQUjyiAY

Alex Ellis (@alexellisuk), March 7, 2023

First up - x86_64

I forked Ed's repo into the "actuated-samples" repo, and edited the "runs-on:" field from "ubuntu-latest" to "actuated".

The build which previously took 1 hour 25 minutes now took 18 minutes 58 seconds. That's a 4.4x improvement.

4.4x doesn't sound like a big number, but look at the actual number.

It used to take well over an hour to get feedback, now you get it in less than 20 minutes.

And for context, this x86_64 build took 17 minutes to build on Ed's laptop, with some existing caches in place.

I used an Equinix Metal m3.small.x86 server, which has 8x Intel Xeon E-2378G cores @ 2.8 GHz. It also comes with a local SSD, although local NVMe would have been faster here.

The Firecracker VM that was launched had 12GB of RAM and 8x vCPUs allocated.

Next up - Arm

For the Arm build I created a new branch and had to change a few hard-coded references from "_amd64.deb" to "_arm64.deb" and then I was able to run the build. This is common enablement work. I've been doing Arm enablement for Cloud Native and OSS since 2015, so I'm very used to spotting this kind of thing.
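One way to avoid this kind of hard-coded reference is to derive the Debian architecture name from the machine type. What follows is a minimal Python sketch, not code from the VPP repository: the `deb_arch` and `deb_filename` helpers and the `example` package name are hypothetical, and it assumes the usual `name_version_arch.deb` naming convention for Debian packages.

```python
# Hypothetical helpers for illustration; not taken from the VPP build scripts.

# Map machine names (as reported by `uname -m`) to Debian architecture names.
DEB_ARCH = {
    "x86_64": "amd64",
    "aarch64": "arm64",
}


def deb_arch(machine: str) -> str:
    """Translate a machine name such as 'aarch64' into a Debian arch name."""
    try:
        return DEB_ARCH[machine]
    except KeyError:
        raise ValueError(f"unsupported machine type: {machine}") from None


def deb_filename(package: str, version: str, machine: str) -> str:
    """Build a package filename following name_version_arch.deb."""
    return f"{package}_{version}_{deb_arch(machine)}.deb"


print(deb_filename("example", "1.0.0", "x86_64"))   # example_1.0.0_amd64.deb
print(deb_filename("example", "1.0.0", "aarch64"))  # example_1.0.0_arm64.deb
```

In a real build script the machine name would typically come from `platform.machine()` or `uname -m` rather than being passed in by hand.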

So the build took 6 hours, and didn't even complete when running with QEMU.

How long did it take on bare-metal? 14 minutes 28 seconds.

That's a 25x improvement.

The Firecracker VM that we launched had 16GB of RAM and 8x vCPUs allocated.

It was running on a Mac Mini M1 configured with 16GB RAM, running with Asahi Linux. I bought it for development and testing, as a one-off cost, and it's a very fast machine.

But this case study is not specifically about using consumer hardware, or hardware plugged in under your desk.

Equinix Metal and Hetzner both have the Ampere Altra bare-metal server available on either an hourly or monthly basis, and AWS customers can get access to the a1.metal instance on an hourly basis too.

To prove the point that BYO means cloud servers just as much as physically owned machines, I also ran the same build on an Ampere Altra from Equinix Metal with 20 GB of RAM and 32 vCPUs. It completed in 9 minutes 39 seconds.

See our hosting recommendations: Actuated Docs: Provision a Server

In October last year, I benchmarked a Raspberry Pi 4 as an actuated server and pitted it directly against QEMU and GitHub's Hosted runners.

It was 24 minutes faster. That's how bad using QEMU can be instead of using bare-metal Arm.

Then, just for run I scheduled the MicroVM on my @Raspberry_Pi instead of an @equinixmetal machine.

Poor little thing has 8GB RAM and 4 Cores with an SSD connected over USB-C.

Anyway, it still beat QEMU by 24 minutes! pic.twitter.com/ITyRpbnwEE

Alex Ellis (@alexellisuk), October 20, 2022

Wrapping up

So, wrapping up - if you only build x86_64, and have very few build minutes, and are willing to upgrade to a Team or Enterprise Plan on GitHub, "larger runners" may be an option you want to consider.

If you don't want to worry about how many minutes you're going to use, or surprise bills because your team got more productive, or grew in size, or is finally running those 2 hour E2E tests every night, then actuated may be faster and better value overall for you.

But if you need Arm runners, and want to use them with public repos, then there are not many options for you which are going to be secure and easy to manage.

A recap on the results

You can see the builds here:

x86_64 - 4.4x improvement

- Before: 1 hour 25 minutes - x86_64 build on a hosted runner

- After: 18 minutes 58 seconds - x86_64 build on Equinix Metal

Arm - 25x improvement

- Before: 6 hours (and failing) - Arm/QEMU build on a hosted runner

- After: 14 minutes 28 seconds - Arm build on Mac Mini M1
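The improvement factors are simply the "before" duration divided by the "after" duration. As a quick check, here is a short Python sketch using only the timings quoted in this post; the `speedup` helper is a name made up for this example. Because the QEMU build hit the 6-hour limit without finishing, the Arm figures are lower bounds.

```python
from datetime import timedelta


def speedup(before: timedelta, after: timedelta) -> float:
    """Return how many times shorter `after` is than `before`."""
    return before.total_seconds() / after.total_seconds()


# Durations quoted in this post.
x86_hosted = timedelta(hours=1, minutes=25)
x86_metal = timedelta(minutes=18, seconds=58)
arm_qemu = timedelta(hours=6)  # hit the limit and failed, so a lower bound
arm_m1 = timedelta(minutes=14, seconds=28)
arm_altra = timedelta(minutes=9, seconds=39)

print(f"x86_64 on Equinix Metal: {speedup(x86_hosted, x86_metal):.2f}x")
print(f"Arm on Mac Mini M1: at least {speedup(arm_qemu, arm_m1):.2f}x")
print(f"Arm on Ampere Altra: at least {speedup(arm_qemu, arm_altra):.2f}x")
```

The ratios come out at roughly 4.48x, 24.88x and 37.31x, which this post quotes as 4.4x and 25x for the first two.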

Want to work with us?

Want to get in touch with us and try out actuated for your team?

We're looking for pilot customers who want to speed up their builds, or make self-hosted runners simpler to manage, and ultimately, about as secure as they're going to get with MicroVM isolation.

Set up a 30 min call with me to ask any questions you may have and find out next steps.

Learn more about how it compares to other solutions in the FAQ: Actuated FAQ

See also: